Introduction to Computer Security

Thinking about Security

Paul Krzyzanowski

January 27, 2024

Computer Security

Computer security, often called cybersecurity, is about keeping computer systems, the programs they run, and the data they use, safe. It addresses three areas: confidentiality, integrity, and availability. These three pillars are known as the CIA triad (sometimes called the AIC triad to avoid any confusion with the U.S. Central Intelligence Agency).

Confidentiality

Confidentiality refers to keeping data hidden from anyone who should not have access to it. It can refer to an application or operating system disallowing you from reading the contents of a file or it may refer to data that is encrypted so that you can see it but cannot make sense of it. In some cases, confidentiality can also deal with hiding the very existence of data, computers, or transmitters (this is technically secrecy, as we will soon see). Sometimes simply knowing that two parties are communicating may be useful information for an adversary. RFC 4949, the Internet Security Glossary, defines data confidentiality as:

“The property that information is not made available or disclosed to unauthorized individuals, entities, or processes [i.e., to any unauthorized system entity].”

Confidentiality is the traditional focus of computer security and is usually the first thing that comes to mind when people think about the subject, often with the idea that we can achieve security by encrypting all the important data.

Confidentiality is often confused with privacy. Privacy is your ability to limit what information you permit to be shared with others while confidentiality provides the ability to conceal messages or to exchange messages without others who do not have authorization being able to see them. Privacy controls how others can use information about you.

Privacy focuses on personal information and protecting personally identifiable information (PII). It may include anonymity, which can strip off personally identifiable information from data or communications sessions. For instance, you may want to keep your identity hidden when contacting a crisis or crime reporting center. Researchers may want to analyze medical data but shouldn’t see the identities of the people to whom the data belongs.

RFC 4949, the Internet Security Glossary, which cross-references HIPAA and the U.S. Privacy Act of 1974, defines privacy as:

“The right of an entity (normally a person), acting in its own behalf, to determine the degree to which it will interact with its environment, including the degree to which the entity is willing to share its personal information with others.”

Secrecy refers to the state of keeping something hidden or not publicly known. It is about intentionally concealing information from others.

The differences among confidentiality, privacy, and secrecy are difficult to remember since we often use these terms interchangeably when speaking colloquially. Also, note that the terms “secret” and “confidential” may simply refer to different security clearance classification levels, which are often used in government work1. The distinctions, particularly between confidentiality and secrecy, can be fluid. Let’s use some working definitions:

- Secrecy refers to information that is never intended to be disclosed outside of the individual or the organization. For example, a company may have trade secrets. In some definitions, secrecy refers to the situation where a threat actor is not even aware that the information exists. Secrecy is about hiding the very existence of information. For example, an adversary should not know that there is a secret plan to overthrow the government. If the plan were confidential, that would imply that the adversary knows there is a plan but does not know what that plan is. While secrecy can be a means to achieve confidentiality, not all confidential information needs to be a secret. Some confidential information is known to exist, with only its contents restricted to a limited group of authorized individuals.

- Privacy refers to a human’s right to control their personal information and how it is collected, used, disclosed, and shared by others. This includes our medical records and financial data. The data is not secret but we want it to be shared only with appropriate entities, ideally in ways we understand and can revoke if necessary.

- Confidentiality refers to the rules and techniques for handling trusted data. Confidentiality can be thought of as systems-level solutions (policies and mechanisms) to ensure that privacy is preserved.

The need for privacy is a reason for implementing confidentiality. Privacy has become increasingly difficult to attain. Data that never was truly private, such as property sales, can be more easily aggregated as it is now readily available on the network. A lot of “free” services collect data on your searches, browsing, posts, likes, etc. This includes Facebook, X, LinkedIn, Google, Instagram, TikTok, and many, many others. It includes every ad-supported service. This observation led to the saying, “if you’re not paying for the product, you are the product”. 2 Building a more complete profile of an individual is also possible by correlating data sets from multiple sources. Companies make a business of doing this and selling the correlated information.

Integrity

Integrity is ensuring that data and all system resources are trustworthy. This means that neither is maliciously or accidentally modified. There are three categories of integrity:

Data integrity is the property that data has not been modified or destroyed in an unauthorized or accidental manner. Someone cannot modify, overwrite, or delete your files. When communicating, message integrity is the same thing: the contents of a message will not be altered or deleted.

Origin integrity is the property that a message has been created by its author and not modified since then. It allows one to associate data with its creator. It also includes authentication, techniques that allow a person or program to prove their identity. For example, are you really communicating with your bank? If you send it a message and the bank can respond with a message identifying itself and origin integrity is present, then you can be sure that the message came from the bank.

System integrity is the property that the entire system is working as designed, without any deliberate or accidental modification of data or manipulation of processing that data.
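Data integrity and origin integrity can be illustrated with a short sketch. It is only an illustration under stated assumptions: the message, the key, and the helper names `digest`, `sign`, and `verify` are hypothetical and not part of the original text. A SHA-256 digest detects that data changed, but only if the recorded digest itself is kept out of the attacker's reach. An HMAC additionally ties the message to whoever holds a shared key, which is one simple (symmetric) way to approximate origin integrity.

```python
import hashlib
import hmac


def digest(data: bytes) -> str:
    """Return a SHA-256 fingerprint of the data (data integrity check)."""
    return hashlib.sha256(data).hexdigest()


def sign(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag that only a holder of the key can produce."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    """Check the tag in constant time to avoid leaking timing information."""
    return hmac.compare_digest(sign(key, message), tag)


# Data integrity: any change to the data changes the digest.
original = b"transfer $100 to account 12345"
tampered = b"transfer $900 to account 12345"
recorded = digest(original)
data_intact = digest(original) == recorded
data_modified = digest(tampered) != recorded

# Origin integrity: the tag verifies only with the shared key (hypothetical key).
shared_key = b"hypothetical key shared by you and the bank"
tag = sign(shared_key, original)
genuine = verify(shared_key, original, tag)
wrong_key = verify(b"attacker guess", original, tag)
altered_message = verify(shared_key, tampered, tag)
```

Because the key is shared, either party could have produced the tag. Proving authorship to a third party requires digital signatures, which this sketch does not attempt.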

In many cases, integrity is of greater value than confidentiality. I might not care if you see me transfer money between two of my bank accounts but I want the system to ensure that you cannot impersonate me and do the same … or transfer my money to a different account.

Availability

Availability deals with systems and data being available for use. We want a system to operate correctly on the correct data (integrity) but we also want that system to be accessible and capable of performing to its designed specifications. For example, a denial of service (DoS) or a distributed denial of service (DDoS) attack is an attack on availability. The attack does not access any data or modify the function of any processes. However, it either dramatically slows down or completely disallows access to the system, hurting availability.

We want all three of these properties – confidentiality, integrity, and availability – in a secure system. For example, we can get confidentiality and integrity simply by turning off a computer but then we lose availability. Integrity on its own is useful, but does not provide the confidentiality that is needed to ensure privacy. Confidentiality without integrity is useless since you may access data that was modified without your knowledge or use a program that is manipulating the data in a manner that you did not intend.

Thinking about security

Algorithms, cryptography, and math on their own do not provide security. Security is not about simply adding encryption to a program, enforcing the use of complex passwords, or placing your systems behind a network firewall. Security is a systems issue and is based on all the components of the system: the hardware, firmware, operating systems, application software, networking components, and the people. The entire supply chain is responsible for providing security. If you use a non-secure library, a hacked compiler, or a buggy processor, the security of your system may be compromised.

“Security is a chain: it’s only as secure as the weakest link” — Bruce Schneier

Consider the problems that arose from the Spectre vulnerability that was discovered in 2018 and affected the security of practically every modern microprocessor designed over the past several decades. A low-level side-effect in the way a processor operates could lead to a JavaScript exploit that allows a website to read a browser’s memory, including data stored from visits to other websites.

As another example, Log4J is a widely-used Java-based library for logging events. It is used in 35,863 Java packages. In December 2021, the Alibaba Cloud Security Team discovered a bug in the library that enables arbitrary code execution. Because the library has been around for 21 years and used in tens of thousands of packages, this bug has been described as the biggest, most critical vulnerability of the past decade. Any of those 35,863 Java packages may be vulnerable. The exploit was relatively easy to carry out since software is often good at logging bad inputs. In this case, the attacker could issue a request that would expand into a JNDI (Java Naming and Directory Interface) lookup, which could execute arbitrary code on the target machine. The victims didn’t write bad code; they just happened to use a library that could be exploited.
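The general shape of this class of bug can be shown with a toy example. This is not Log4J's code or API. It is a hypothetical Python logger, written only for illustration, that expands `${name:arg}` lookups anywhere in a logged message, including attacker-controlled input. The `remote` handler is a harmless stand-in that merely records which address would have been contacted.

```python
import re

# Matches lookups of the form ${name:arg} inside a message.
LOOKUP = re.compile(r"\$\{(\w+):([^}]*)\}")


def naive_log(message, handlers, sink):
    """Toy logger: expands lookups found anywhere in the message text."""
    def expand(match):
        name, arg = match.group(1), match.group(2)
        handler = handlers.get(name)
        # Unknown lookups are left untouched; known ones are executed.
        return handler(arg) if handler else match.group(0)

    sink.append(LOOKUP.sub(expand, message))


def literal_log(message, sink):
    """Safer variant: untrusted text is recorded verbatim, never interpreted."""
    sink.append(message)


contacted = []  # addresses the "remote lookup" stand-in was asked to reach


def fake_remote_lookup(url):
    # Hypothetical stand-in: a real lookup would fetch and run remote content.
    contacted.append(url)
    return "<remote object>"


handlers = {"remote": fake_remote_lookup}
user_agent = "${remote:ldap://attacker.example/a}"  # attacker-supplied header

naive_sink, literal_sink = [], []
naive_log("User-Agent: " + user_agent, handlers, naive_sink)
literal_log("User-Agent: " + user_agent, literal_sink)
```

In the naive version, simply logging a bad input causes the program to act on it. The literal version records the same string without side effects.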

Security needs are based on people, their relationships with each other, and their interactions with machines. Hence, security also includes processes, procedures and policies. Security must also address the detection of intruders and have the ability to perform forensics; that is, figure out what damage was done and how.

Security is difficult. If it were not, we would not see near-daily occurrences of successful attacks on systems, including high-value systems such as banks3, governments4, hospitals5, large retailers6, and high-profile websites7. Unfortunately, security is often an afterthought, something that companies attempt to add onto a system after it is developed.

Examples: data breaches

Exfiltration is the term for the unauthorized transfer of data; that is, stealing data. The ability for an unauthorized user to access such data is a data breach. Some large data breaches included:

- COMB - Compilation Of Many Breaches - 2017, February 2021

- This is an amalgamation of multiple breaches. In 2017, a compilation of multiple breaches, containing 1.4 billion user credentials, was leaked. On February 2, 2021, COMB was leaked on a hacking forum and contained billions of user credentials from past leaks from Netflix, LinkedIn, and many others. This breach contains 3.27 billion credentials, more than double the number leaked in the 2017 breach compilation.

- CAM4 - March 2020

- The adult video streaming website CAM4 had a misconfigured ElasticSearch database that made it easy for anyone to access its data. Researchers were able to download over 10 billion records, from which they found 11 million email addresses, including full names, chat transcripts, and payment logs. It’s not clear if hackers downloaded any of this data but it’s incredibly sensitive information that could be useful for blackmailing.

- Aadhaar database - July 2023

- The Aadhaar database is the Indian government’s comprehensive identification system that provides each Indian resident with a unique identification number and stores demographic and biometric information about residents. The personal information of over 810 million Indian residents, including names, phone numbers, addresses, Aadhaar, and passport details, was accessed and made available for sale via the dark web.

- Verifications.io – February 2019

- The email validation service verifications.io exposed 763 million email addresses in a MongoDB database that was left exposed without a password. Many of those records included names, phone numbers, dates of birth, and genders.

- Aadhaar database – March 2018

- The 2023 breach was another attack on the Aadhaar database. In 2018, the personal information of more than 1.1 billion Indian citizens stored in the world’s largest biometric database leaked via an open website. Names, unique identity numbers, bank details, photos, thumbprints, and retina scans of residents were stolen.

- Yahoo – October 2017

- Three billion user accounts were stolen from Yahoo’s servers. This included usernames, security questions, and answers to those questions.

- Alibaba - July 2022

- 1.1 billion customer records were stolen from Alibaba’s cloud hosting servers. This included customer names, phone numbers, physical addresses, and criminal records.

- First American Financial - 2019, 2023

- In May 2019, information about 885 million users was taken from First American Financial Corporation. This included bank account data, Social Security numbers, wire transaction information, and mortgage data. The company was attacked again in December 2023, when the attackers stole system data. This was around the same time that it reached a $1 million settlement over the 2019 data breach.

- Marriott - November 2018

- In November 2018, customer information about 500 million Starwood hotel customers spanning the period between 2014 and 2016 was stolen. This included names, contact information, passport numbers, and other personal information. 100 million of those customers also had credit and debit card numbers stolen.

- Adult Friend Finder – October 2016

- In October 2016, 20 years of data from six databases operated by Adult Friend Finder was stolen. This included names, email addresses, and passwords. Similar to the more recent CAM4 open database, this breach may be particularly useful for future blackmailing since we can assume that most users of these services don’t want their use of the service to be made public.

Examples: attacks

In December 2020, attacks against software produced by SolarWinds were discovered. In this series of attacks, a suspected Russian hacking group known as Nobelium or Cozy Bear targeted government agencies and thousands of businesses that used SolarWinds Orion software to manage IT resources8. This is an example of a software supply chain attack. The attackers modified a DLL file that was part of a software update for the Orion platform sold by SolarWinds. The software contains millions of lines of code. Of these, 4,032 lines were rewritten and distributed to customers as an update that contained a secret backdoor. Around 18,000 customers, including six U.S. Government departments, use this software. By breaking into SolarWinds, the attackers were able to spread their malicious software to all these customers of SolarWinds. Microsoft called this “the largest and most sophisticated attack the world has ever seen,” estimating that more than a thousand engineers worked on developing this exploit. Part of this hack allowed attackers to gain access to Microsoft 365 mail accounts. The attack was detected in December 2020 but might have started as far back as January 2019.

Kaseya is a Florida-based company that develops software for managing networks and other IT infrastructure. Like SolarWinds, it is not a household name. Attackers were able to bypass the security of their remote monitoring and management tool and use it to distribute ransomware to the company’s customers. This is another example of a supply chain attack. Between 800 and 1,500 businesses were affected. For example, 800 stores in the Coop supermarket chain in Sweden had to shut down for a week while they rebuilt their systems. The REvil ransomware group took credit for this and asked for a $70 million ransom but Kaseya did not pay.

A ransomware attack targeting the Colonial Pipeline took place in May 2021. This company carries gasoline, diesel, and jet fuel from Texas. It carries about 45% of all fuel consumed on the East Coast of the U.S. The attack led the company to stop all operations in an attempt to contain the attack. It cost the company $4.4 million to recover the assets and resume operations. Attackers were able to carry out this attack because they got hold of a single compromised password to a virtual private network that gave them access to the company’s internal network. This was an account that was no longer in use but still gave them access to the network.

We’ve seen the example of the Log4J vulnerability. Supply chain attacks also hit libraries distributed through NPM, the popular JavaScript package manager. In October 2021, the UAParser.js library, which parses data from user-agent strings, was found to have been modified. Another library, coa (command-option-argument), was maliciously modified in November 2021. That package is downloaded approximately nine million times a week and is used in almost five million open source repositories on GitHub and in countless other places. Shortly after that, another package, rc, was also discovered to have been maliciously modified. This component is downloaded around 14 million times a week. These components are used throughout the world, including in software produced by or run by large companies such as Apple, Amazon, Microsoft, Slack, and Reddit. The modifications in the software included the addition of a cryptominer for mining cryptocurrency and a password stealer.

In late 2021, cyberattacks hurt real-world supply chains (versus the supply chain of components used to create and run software). One of the largest cream cheese manufacturers in the U.S. experienced a cyberattack in October 2021 on its plants and distribution centers. This disrupted the production and distribution of cream cheese, causing delis and restaurants to experience shortages. Similarly, Ferrara Candy, the company that makes 85% of the candy corn in the U.S., was attacked by ransomware in October 2021. JBS Meats is one of the largest meat producers. A ransomware attack in May 2021 stopped the global meat supply chain across over 20 countries. The company ended up paying a ransom of $11M.

Canada’s Newfoundland and Labrador health-care system was attacked and taken out of service for several days. Ireland’s public health system experienced a cyberattack in May 2021. The health system was running tens of thousands of outdated Windows 7 systems. The CyberPeace Institute reported that 295 known cyberattacks were conducted on the healthcare sector in the 18 months between June 2, 2020, and December 3, 2021. These occurred at a rate of 3.8 per week and in 35 countries. The vast majority of these have been ransomware attacks9.

In June 2021, hackers broke into the New York City Law Department’s computers. These systems hold medical records, personal data for thousands of city employees, and identities of minors charged with crimes. The attacker used a stolen email address and password to access this data.

Twitch, a game streaming service owned by Amazon, suffered a major data breach in 2021 due to a server configuration mistake. This enabled hackers to steal the entire source code and post it as a 125 GB torrent that contains the source and its commit history.

In 2018, the city of Atlanta, Georgia, had its computers frozen by ransomware. New Haven, Connecticut, was attacked by ransomware in October of that year. In March 2019, municipal computer systems in Jackson County, Georgia, were rendered inoperable by ransomware. The county paid $400,000 in ransom. This was followed by a ransomware attack on Albany, New York. The ransomware attacks continued: Augusta, Maine; Greenville, North Carolina; and Imperial County, California, were all hit in April. Baltimore, Maryland, was hit by ransomware in May of 2019 and Riviera Beach, Florida, was attacked in June, paying the attackers $600,000 in Bitcoin.

Unreleased episodes of the Orange Is The New Black Netflix TV series were posted online by The Dark Overlord hacking group even after Netflix paid a $50,000 ransom. Serbian police later arrested a suspected member of the group but the group continued to assert its existence.

In 2016, the Petya ransomware, which spread through email attachments, targeted Microsoft Windows systems. It infected the master boot record and encrypted the file system, preventing Windows from booting and users from recovering files on the computer. It installed a boot-level program that would demand payment in Bitcoin.

In June 2017, a variation of the Petya malware, known as NotPetya, surfaced and attacked banks, newspapers, companies, and government offices in Ukraine. These infections spread to Australia, France, Germany, Italy, Poland, Russia, the United Kingdom, and the US. Even though the malware purported to be ransomware, it actually destroyed files. Damage from the malware was estimated to be in excess of $10 billion. The virus propagated through an exploit called EternalBlue, which was developed by the U.S. National Security Agency (NSA). The CIA attributed the creation and deployment of NotPetya to Russia’s GRU spy agency but the actual authors are unknown.

77 million Sony PlayStation Network accounts were hacked, causing the site to go down for 1 month and resulting in a $171 million loss. Twelve million of those accounts had unencrypted credit card numbers.

Iranian nuclear power plants were attacked in 2010 by Stuxnet, a computer worm that targeted Windows systems running Siemens software and compromised connected programmable logic controllers (PLCs) to destroy centrifuges.

In 2011, RSA Security was breached. This is a company that was founded by the creators of the first public key encryption algorithm, sells security products, including SecurID authentication tokens, and hosts security conferences. Data on around 40 million users was compromised. The attacker sent two different targeted phishing emails over a two-day period, and the attack used a Flash object embedded in an Excel file. We do not know exactly what data was stolen, although RSA warned customers that stolen data could potentially be used to reduce the effectiveness of their authentication product.

In 2016, Yahoo announced that over a billion accounts were compromised in 2013 and 2014, revealing names, telephone numbers, dates of birth, encrypted passwords, and unencrypted security questions that could be used to reset a password.

TJX, the parent company of TJ Maxx, announced in March 2007 that it had 45.6 million credit cards stolen over a period of 18 months. Court filings later revealed that at least 94 million customers were affected. The incident cost the company $256 million.

Sony Pictures was hacked in 2014 and personal information about employees, their families, salaries, email, and unreleased films was disclosed.

744,408 BTC ($350 million at the time) was stolen in 2014 from one of the first and largest Bitcoin exchanges, Japan’s Mt. Gox. In 2016, more than $60M worth of bitcoin (119,756 BTC) was stolen from Bitfinex.

The October 2016 distributed denial of service (DDoS) attack on DNS provider Dyn was the largest of its type in history and made a vast number of sites unreachable.

In 2016, MedSec, a vulnerability research company focused on medical technology, claimed it found serious vulnerabilities in implantable pacemakers and defibrillators.

Equifax, the credit reporting agency, had an application vulnerability that enabled hackers to steal names, addresses, social security numbers, and other authenticating information of 143 million customers.

In 2018, Marriott reported that contact information, passport numbers, travel information, and credit cards of 100 million customers were stolen over the past four years. Marriott reported that credit card data was encrypted but it is possible that the decryption keys were stolen as well.

The list can go on ad infinitum. Serious breaches occur weekly and show no sign of abating.

Hackbacks

Some hacks are retaliatory or preventive: it’s the good guys using cyberattacks against the hackers. A hackback refers to a retaliatory attack against the attacker. It can involve destroying the attacker’s system, disabling the source of the attack, or deleting or destroying stolen data. For example, in 2019, police in France took control of a server that was used to spread malicious software to mine cryptocurrency on over 850,000 computers. As with regular warfare, some attacks are preemptive. Also in 2019, the U.S. launched a cyberattack against Iran to ensure that it would not later attack oil tankers.

Complexity

At a software level, security is difficult because so much of the software we use is incredibly complex. Microsoft Windows 10 has been estimated to comprise approximately 50 million lines of code. Windows 11 is estimated to contain between 60 and 100 million lines of code. A full Linux Fedora distribution comprises around 200 million lines of code, and all Google services have been counted as taking up around two billion lines of code. It is not feasible to audit all this code and there is no doubt that there are many bugs lurking in it, many of which may have an impact on security. In 2021, 73,700 commits to the Linux kernel were made by 4,421 different authors. 3.2 million lines of new code were added and over 1.3 million lines of code were removed. Even if you could audit the code, the code base would be different by the time you are done. Contributions to the kernel came from thousands of authors. How do you have confidence that none of them are malicious?

But security is about systems, not a single operating system or program. Systems themselves are complex with many components ranging from firmware on various pieces of hardware to servers, load balancers, routers, networks, clients, and other components. Systems often interact with cloud services and programs often make use of third-party libraries (you didn’t write your own compiler or JSON parser). There are complex interaction models and asynchronous events that make it essentially impossible to test every possible permutation of inputs to a system. Moreover, all components are often not under the control of one administrator. A corporate administrator may have little or no control of the software employees put on their phones or laptops or the security in place at various cloud services that might be employed by the organization (e.g., AWS, Slack, Dropbox, Office365). Security must permeate the system — all of its components: hardware, software, networking, and people.

People themselves are a huge, and dominant, problem in building a secure system. They can be careless, unpredictable, overly trusting, bribable, and malicious. Most security problems are not due to algorithms or bad cryptography but to the underlying processes and people. The human factor, and social engineering in particular, is the biggest problem and top threat to systems. Social engineering is a set of techniques aimed at deceiving humans to obtain needed information. It often relies on pretexting to obtain the needed data, where a person or a program pretends to be someone else.

Security System Goals

We saw that computer security addresses three areas: confidentiality, integrity, and availability. The design of security systems also has three goals.

Prevention. Prevention aims at preventing attackers from violating your security policy. Implementing this requires creating mechanisms that users cannot override. A simple example of prevention is requiring software to accept and validate a password. Without the correct password, an intruder cannot proceed.

Detection. Detection attempts to detect and report security attacks. It is particularly important as a safeguard when prevention fails. Detection will allow us to find where the weaknesses were in the mechanism that was supposed to enforce prevention. Detection is also useful in detecting active attacks even if the prevention mechanisms are working properly. It can allow us to know that an attack is being attempted, identify where it is originating from, and what it is trying to do.

Recovery. Recovery has the goals of stopping any active attack and repairing any damage that was done by an attack. A simple but common example of recovery is restoring a system from a backup. Recovery includes forensics, which is the gathering of evidence to understand exactly what happened and what was damaged.
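The first two goals can be sketched together in a few lines. The following is a minimal illustration, not a production login system. The class name `LoginGate`, the failure threshold, the iteration count, and the example address are all assumptions made for this sketch. Prevention is the password check itself. Detection is the record of repeated failures, which reports an attack in progress even though prevention is holding.

```python
import hashlib
import hmac
import os


def hash_password(password: str, salt: bytes) -> bytes:
    """Derive a slow, salted hash so stored values are hard to brute-force."""
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


class LoginGate:
    """Hypothetical gate: validates a password and flags repeated failures."""

    def __init__(self, password: str, max_failures: int = 3):
        self.salt = os.urandom(16)
        self.stored = hash_password(password, self.salt)
        self.max_failures = max_failures
        self.failures = {}  # source address -> consecutive failed attempts
        self.alerts = []    # detection output for an administrator to review

    def attempt(self, source: str, password: str) -> bool:
        candidate = hash_password(password, self.salt)
        if hmac.compare_digest(candidate, self.stored):
            self.failures.pop(source, None)  # success resets the counter
            return True
        # Prevention held; now record the event so it can be detected.
        count = self.failures.get(source, 0) + 1
        self.failures[source] = count
        if count >= self.max_failures:
            self.alerts.append(f"{count} failed logins from {source}")
        return False


gate = LoginGate("correct horse battery staple")
results = [gate.attempt("203.0.113.7", guess) for guess in ("a", "b", "c")]
legitimate = gate.attempt("198.51.100.2", "correct horse battery staple")
```

Recovery is not shown. In this setting it might involve forcing a password reset and reviewing the alert log to understand what was attempted.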

Policies & Mechanisms

Policies and mechanisms are at the core of designing secure systems 10. A policy specifies what is or is not allowed. For example, only employees in the human resources department have access to certain files or only people in the IT group can reboot a system. Policies can be expressed in natural language, such as a policy document. Policies can be defined more precisely in mathematical notation but that is rarely useful for most humans or software. Most often, they will be described in some precise machine-parsable, human-readable policy language and applied to specific components of the system. Such a language provides a high degree of precision along with the ability to be readable by humans. The Web Service Security Policy Language is an example of a security policy language that defines constraints and requirements for SOAP-based web services, although W3C web security standards don’t seem to be getting much active development.

A mechanism refers to the components that implement and enforce policies. For example, a policy might dictate that users have names and passwords. A mechanism will implement the interface for asking for a password and authenticating it.
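The separation between policy and mechanism can be sketched as data versus code. In this illustrative example, every name (`POLICY`, `DEPARTMENTS`, `is_allowed`, the users, and the actions) is hypothetical. The policy is a table stating which departments may perform which actions, echoing the human resources and IT examples above. The mechanism is the function that enforces whatever the table says, denying anything not explicitly allowed.

```python
# Policy: what is allowed, expressed as data that can change without new code.
POLICY = {
    "read_personnel_files": {"hr"},
    "reboot_system": {"it"},
}

# Hypothetical directory mapping users to their departments.
DEPARTMENTS = {
    "alice": "hr",
    "bob": "it",
}


def is_allowed(user: str, action: str) -> bool:
    """Mechanism: enforce the policy, denying by default."""
    department = DEPARTMENTS.get(user)
    if department is None:
        return False  # unknown users get no access
    return department in POLICY.get(action, set())


checks = {
    ("alice", "read_personnel_files"): is_allowed("alice", "read_personnel_files"),
    ("bob", "read_personnel_files"): is_allowed("bob", "read_personnel_files"),
    ("bob", "reboot_system"): is_allowed("bob", "reboot_system"),
    ("mallory", "reboot_system"): is_allowed("mallory", "reboot_system"),
    ("alice", "delete_backups"): is_allowed("alice", "delete_backups"),
}
```

Keeping the policy as data means it can be reviewed or changed by people who never read the enforcement code, while the mechanism stays small enough to audit.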

Security Engineering

We are interested in examining computer security from an engineering point of view. At the core, we have to address security architecture: how do we design a secure system and identify potential weaknesses in that system? Security engineering is the task of implementing the necessary mechanisms and defining policies across all the components of the system. The architecture specifies what needs to be built and the engineering determines how it will be built.

Making compromises is central to any form of engineering. For example, a structural engineer does not set out to build the ultimate earthquake-proof and storm-proof building when designing a skyscraper in New York City but instead follows the wind load recommendations set forth in the New York City Building Code. Similarly, there is no such thing as an unbreakable or fireproof vault or safe. Safes are rated by how much fire or attack they can withstand. For instance, a class 150 safe can maintain an internal temperature of less than 150° F (66° C) and 85% humidity for a specific amount of time (e.g., 1 hour). A class TL-30 combination safe will resist abuse from mechanical and electrical tools for 30 minutes. Watches are another example. No watch is truly waterproof. Instead, they are rated for water resistance at a specific depth (pressure), although watches such as the Rolex Deepsea Challenge are waterproof for all practical purposes. Even the Deepsea Challenge, however, is rated not to infinite depth but to 11,000 meters (36,090 feet).

Engineering tradeoffs relate to economic needs. Do you need to spend over $25,000 on the Rolex Deepsea Challenge or will the Sea-Dweller, which is rated for only 1,200 meters but costs only around $13,250, be good enough? All safes can be opened. A safe with a rating of TL-15 from Underwriters Laboratories has a door that successfully resists entry for a working time of 15 minutes while a safe rated TL-30 resists entry for 30 minutes when attacked with various hand tools, lock picking tools, portable electric tools, cutting wheels, power saws, grinders, and carbide drills. Do you buy an AMSEC CF4524 safe for $6,847.50, which is rated TL-30, or spend $400 less and get the same-size, identical-looking AMSEC CE4524 that is only rated TL-15?

The same applies to computer security. No system is 100% secure against all attackers for all time. If someone is determined enough and smart enough, they will get in. The engineering challenge is to understand the tradeoffs and balance security vs. cost, performance, acceptability, and usability. It may be cheaper to recover from certain attacks than to prevent the attack.

We want to secure our systems … but what do we secure them against or from whom? There is a wide range of possible attackers. For example, you may want to secure yourself against:

Yourself accidentally deleting important system files.

Your colleagues, so they will not be able to look at your files on a file server.

An unhappy system administrator.

An adversary trying to find out about you and get personal data.

A phone carrier tracking your movements.

An enemy who plans to throw a grenade at your computer.

The NSA or other government spy agencies.

Risk analysis

Protecting yourself from accidentally destroying critical system files is a far easier task than defending your system from the NSA if the agency is determined to access your computer. Assessing a threat is called risk analysis. We want to determine what parts of the system need to be protected, to what degree, and how much effort and expense we should expend on protecting them.
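One common way to make this kind of comparison concrete is to estimate the expected yearly loss from a threat and weigh it against the yearly cost of defending against it. The sketch below is only an illustration: the `Threat` class, the probabilities, and the dollar amounts are hypothetical values invented for the example, not figures from a real assessment.

```python
from dataclasses import dataclass


@dataclass
class Threat:
    name: str
    annual_probability: float  # assumed chance of an incident in a year
    loss_per_incident: float   # assumed cost of recovering from one incident
    mitigation_cost: float     # assumed yearly cost of preventing it

    def expected_annual_loss(self) -> float:
        return self.annual_probability * self.loss_per_incident

    def worth_mitigating(self) -> bool:
        # Prevention makes economic sense only if it costs less than
        # the loss we expect to suffer without it.
        return self.mitigation_cost < self.expected_annual_loss()


# Hypothetical numbers chosen only to illustrate the tradeoff.
threats = [
    Threat("accidental file deletion", 0.50, 2_000, 300),
    Threat("attack by a government agency", 0.001, 500_000, 250_000),
]

for t in threats:
    decision = "mitigate" if t.worth_mitigating() else "accept and plan recovery"
    print(f"{t.name}: expected loss ${t.expected_annual_loss():,.0f}/yr -> {decision}")
```

With these made-up inputs, cheap protection against accidental deletion pays for itself, while the cost of defending against the most capable adversary far exceeds the expected loss. Real risk analysis also has to account for legal, reputational, and safety consequences that do not reduce neatly to a single number.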

As part of risk analysis, we may need to consider laws to assess whether any types of security measures are illegal. That can restrict how we design our system. For example, certain forms of cryptography were illegal to export outside the U.S. and some restrictions still exist.

We also need to consider user acceptability or customs. Will people put up with the security measures, try to bypass them, or revolt altogether? For instance, we may decide to authenticate a user by performing a retina scan (which requires looking into an eyepiece of a scanner) along with a DNA test, which requires swabbing the mouth and waiting 90 minutes when using a solid-state DNA testing chip. While these mechanisms are proven techniques for authentication, few people would be willing to put up with the inconvenience. On the systems side, one would also need to consider the need for, and the expense of, any special equipment. We thus need to balance security with effort, convenience, and cost.

Economic incentives

Securing systems has a cost: it requires time and effort. Organizations may not be willing to spend the money for extreme security measures because it simply does not make economic sense for them.

Stores rarely suffer any financial consequences if customer credit card data is stolen, so why spend the money to secure the information better?

If your business suffers a breach because of a bug in a Microsoft operating system, it might be hugely disruptive to you and hurt your business. However, Microsoft is not responsible for dealing with your loss since you agreed to their end-user license agreement, the EULA, before installing or using the software. It specifically states:

“You may not under this limited warranty, under any other part of this agreement, or under any theory, recover any damages or other remedy, including lost profits or direct, consequential, special, indirect, or incidental damages.”

Can Microsoft, or any other company, design software with fewer bugs? Sure, but there will be a tradeoff. It would take more time to test the software, resulting in significant delays to its release. Features would be reduced to keep the amount of testing needed more manageable. It’s not clear that customers will be more satisfied. The same applies to protection against data breaches. Companies are not eager to spend millions of dollars on hardening their infrastructure if they have insurance against cyberattacks and believe that there will not be a lasting loss to their reputation or ability to run their business.

In some cases, security is important but employee incentives are not aligned with the needs of the organization. An example is the U.S. Central Intelligence Agency (CIA), which created a “workplace culture in which the agency’s elite computer hackers ‘prioritized building cyber weapons at the expense of securing their own systems’”. This led to the theft, and subsequent Wikileaks publication, of the CIA’s hacking tools, called Vault 7. It was the biggest unauthorized disclosure of classified information in the history of the CIA.

Attacks and threats

When our security systems are compromised, it is because of a vulnerability.

Vulnerabilities

A vulnerability is a weakness in the security system. It could be a poorly defined policy, a bribed individual, or a flaw in the underlying mechanism that enforces security. Vulnerabilities tend to be a big focus in computer security classes. Examples of vulnerabilities include:

- Lack of input buffer size checks

- Enables entered data to corrupt other parts of the program, sometimes permitting code injection

- Input validation bugs

- Poor input validation may allow attackers to enter data that will be treated as commands or database queries

- Protocol bugs

- Exploiting buggy protocols may enable an attacker to redirect traffic or disable encryption

- Unprotected files

- Unprotected configuration files in a system can allow an attacker to change them to alter network routes, start malicious programs when the system boots up, or change search paths for programs.
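To make the input validation item above concrete, here is a minimal sketch of input being treated as part of a database query. It uses Python's standard `sqlite3` module with an in-memory database; the `users` table, its contents, and the two lookup functions are hypothetical and exist only for this illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "alice-secret"), ("bob", "bob-secret")],
)


def lookup_unsafe(name: str) -> list:
    # Vulnerable: user input is pasted into the query text, so the
    # database cannot tell data apart from SQL syntax.
    query = f"SELECT secret FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def lookup_safe(name: str) -> list:
    # Safer: the ? placeholder passes the input strictly as a value.
    return conn.execute(
        "SELECT secret FROM users WHERE name = ?", (name,)
    ).fetchall()


malicious = "nobody' OR '1'='1"
print(lookup_unsafe(malicious))  # every row matches, so all secrets are returned
print(lookup_safe(malicious))    # no user has that literal name: []
```

The vulnerability here is the string concatenation in `lookup_unsafe`; the crafted input is what exploits it.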

Attack vectors

The term attack vector refers to the specific technique that an attacker uses to exploit the vulnerability and compromise the security of a system. It is the way in which an attack is carried out. Common attack vectors include:

- Phishing

- Sending an email that is disguised as a legitimate message to get the recipient to take some action.

- Keylogging

- Installed hardware or software that collects everything a user types

- Modification of data

- Modifying routing tables, configuration files, or anything that may cause messages to be rerouted.

- DDoS (Distributed Denial of Service)

- Disrupt a server’s ability to process requests

- Buffer overflow

- Exploit an input buffer size validation vulnerability to overflow a buffer and cause a program to behave differently.

Exploits & attacks

An exploit is the specific software, input data, or instructions that take advantage of a vulnerability. An exploit may be the technique of sending an email with an attachment that contains a Microsoft Word document with a malicious script in it that will take advantage of a vulnerability in Word.

An attack (sometimes called a cyber-attack) is the active use of an exploit to get around the security policies and mechanisms that are in place and steal data, run code on a computer, snoop on network traffic, or disable the system. For example, trying common passwords to log into a system is an attack.
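As a rough illustration of the password-guessing example, the sketch below tries a short list of common passwords against a stored password hash. Everything in it is hypothetical: the salt, the stored password, the word list, and the `dictionary_attack` helper are invented for the example, and a plain salted SHA-256 is used only to keep the code short.

```python
import hashlib
from typing import List, Optional

# Hypothetical stored credential: a salted SHA-256 hash of a weak password.
# Real systems should use a deliberately slow password hash instead.
SALT = b"example-salt"
stored_hash = hashlib.sha256(SALT + b"letmein").hexdigest()

# A tiny stand-in for the large lists of common passwords attackers use.
common_passwords = ["123456", "password", "qwerty", "letmein", "dragon"]


def dictionary_attack(target_hash: str, candidates: List[str]) -> Optional[str]:
    """Return the first candidate whose salted hash matches, else None."""
    for guess in candidates:
        if hashlib.sha256(SALT + guess.encode()).hexdigest() == target_hash:
            return guess
    return None


print(dictionary_attack(stored_hash, common_passwords))  # prints: letmein
```

The weakness being exploited is the user's choice of a common password; the loop over candidates is the attack.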

An attack surface refers to the sum of possible attack vectors in a system. It is the total number of places in a system that an attacker might use to try to get into the system. It does not mean that these are vulnerabilities. In building secure systems, our goal is to be aware of the attack surface of our environment so we can assess the likelihood of various attacks and build defenses. We would also, if possible, like to reduce the size of the attack surface. The smaller the attack surface, the fewer places there are for an attacker to strike, and the fewer areas there are for us to inspect and guard.

A threat, threat actor, or threat agent is a potential adversary (a person or thing) that could attack the system. The key point is that a threat refers to the possibility of an attack rather than the attack itself: every attacker was a threat, but a threat will not necessarily attack.

Threats fall into four broad categories 11:

Disclosure: Unauthorized access to data, which covers exposure, interception, interference, and intrusion. This includes stealing data, improperly making data available to others, or snooping on the flow of data.

Deception: Accepting false data as true. This includes masquerading, which is posing as an authorized entity; substitution or insertion, which includes the injection of false data or modification of existing data; and repudiation, where someone falsely denies receiving or originating data.

Disruption: Some change that interrupts or prevents the correct operation of the system. This can include maliciously changing the logic of a program, a human error that disables a system, an electrical outage, or a failure in the system due to a bug. It can also refer to any obstruction that hinders the functioning of the system.

Usurpation: Unauthorized control of some part of a system. This includes theft of service or theft of data as well as any misuse of the system such as tampering or actions that result in the violation of system privileges.

Many attacks are combinations of these threat categories. For example,

- Snooping, or eavesdropping, is the unauthorized interception of information

- It is a form of disclosure and is countered with confidentiality mechanisms.

- Modification, or alteration, is the making of unauthorized changes to information

- This is a form of deception, disruption or usurpation and is countered with integrity mechanisms.

- Masquerading, or spoofing, is the impersonation of one entity by another

- It is a form of deception and usurpation and is countered with integrity mechanisms.

- Repudiation of origin is the false denial that an entity sent or created something.

- It is a form of deception and may be a form of usurpation and is countered with integrity mechanisms.

- Denial of receipt is the false denial that an entity received data or a message

- It is a form of deception and is countered with integrity and availability mechanisms.

- Delay is the temporary inhibition of a service

- It is a form of disruption and may be a form of usurpation. It is countered with availability mechanisms.

- Denial of service is the long-term inhibition of a service

- It is a form of disruption and may be a form of usurpation. It is countered with availability mechanisms.

Risks introduced by the Internet

“The internet was designed to be open, transparent, and interoperable. Security and identity management were secondary objectives in system design. This lower emphasis on security in the internet’s initial design not only gives attackers a built-in advantage. It can also make intrusions difficult to attribute, especially in real-time. This structural property of the current architecture of cyberspace means that we cannot rely on the threat of retaliation alone to deter potential attackers. Some adversaries might gamble that they could attack us and escape detection.”

— William J. Lynn III, Deputy Defense Secretary, 2010

The Internet provides us with a powerful mechanism for communicating with practically any other computer in the world. It also provides a way for attackers to target systems from practically any other computer in the world. Even though the Internet was a direct descendant of the ARPANET, a project funded by the U.S. Defense Advanced Research Projects Agency (DARPA), its design priority was to interconnect multiple disparate networks and create a decentralized architecture. Security was not a design consideration of the Internet.

The network itself is “dumb” by design. It is responsible only for routing packets from one system to another. As we’ll see later, even this is problematic since no one entity owns the Internet or is responsible for defining the routing rules. The Internet does not guarantee reliable, ordered, or secure delivery. The intelligence of the Internet is at the endpoints, in software. Protocols such as TCP run on endpoint computers to provide ordered, reliable delivery of messages if programs need that. If security mechanisms are needed, it is expected that they, too, will be implemented at the endpoints.

Any system can join the Internet and start sending and receiving messages. Systems can even offer routing services, allowing new networks to extend the Internet. This provides opportunities for redirecting messages and impersonating other systems.

The Internet also makes it easy for bad actors to hide: they can be anywhere in the world and they have no obligation to announce their presence or register themselves in any way. For example, in November 2008, a computer worm named Conficker was detected on Microsoft Windows systems. This was malicious software that propagated to other systems by taking advantage of bugs in Microsoft Windows and testing common passwords using a dictionary attack12. Each system that was successfully targeted by Conficker became part of a huge botnet, where it would get instructions from a remote command and control server. Conficker infected millions of computers in over 190 countries, including those at the United Kingdom Ministry of Defence, the Bundeswehr (German armed forces), the French Navy, and the Manchester Police Network. It was one of the largest malware infections in the world and, amazingly, even over ten years later, we do not know who created it. It appears to have originated in Ukraine but the author or authors are unknown.

Advantages of the Internet for bad actors

Computer versus real-world risks

Computer threats mimic real-world threats. Systems are subject to theft, vandalism, extortion, fraud, coercion, and con games. The motivations are the same but the mechanisms are different.

In the physical world, for most people and companies, security risks are usually low. Most people are not attacked and most companies are not victims of espionage. It is statistically unlikely that you will be mugged or that your house or car will be broken into. The number of bad actors in the world, thankfully, is a tiny percentage of the population. They need to have a reason to target you or, if they are acting randomly, there are only so many people, houses, and cars that they can attack over a period of time. With each attack, there is a significant risk of getting caught, which provides a certain degree of disincentive for such malicious actions. There is also no guarantee that the perpetrator will steal anything of value. The risk-reward ratio for physical crimes is uncomfortably high.

In the computer world, however, attacking is often much easier, far less risky, and hence much more appealing. The risks of getting attacked are, therefore, much higher. Since the Internet was designed with open connectivity as a goal and not security, it offers some unique opportunities for bad actors:

Data access. Privacy rules may be the same in the computer world but accessing data is easier. For example, collecting data on recent real estate sales can be done automatically. This can help in social engineering. Access to data & storage is cheap and easy. Networking is cheap. Robocalls are cheap. It is easy to collect, search, sort, and use data. By mining and correlating marketing data, we can target potential targets (“customers”) better. For example, one can buy data from credit databases such as Experian or Equifax.

Attacks from a distance are possible. You do not need to be physically present. Hence there is less physical danger, which allows cowards to attack. You can also be in a different state or even a different country. Networking and communications enable knowledge sharing. Only the first attacker needs to be skilled; others can use the same tools. Prosecution is difficult. Usually, there’s a high degree of anonymity.

Asymmetric power. Actors can project or harness significant force for attack. This differs dramatically from the physical world where, for example, a small nation would not risk attacking a large one that could easily overpower it. Offense can be much more effective than defense. A Distributed Denial of Service (DDoS) attack is an example of the use of asymmetric force. A company has only so many servers on the network. If an attacker can harness a large number of computers to send requests to the company’s servers, the servers will get overloaded and be unable to process legitimate requests.

A botnet is a collection of computers that have been infected with malicious software (malware). The infected computers are called zombies and their owners will often not have a clue that their computer is a part of a botnet. The software on these zombies waits for instructions from a computer known as a command and control server. The instructions may tell each zombie what additional software to download, when to run, and what systems to attack. With this botnet, attackers can launch a Distributed Denial of Service (DDoS) attack. An organization has only so many servers and only so much network capacity. A coordinated flood of requests from hundreds of thousands or millions of computers can completely overwhelm a company’s servers and disrupt availability to those who need to use the service legitimately. Attackers can create botnets themselves or, more easily, rent them as a service.
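The asymmetry is easy to see with some back-of-the-envelope arithmetic. The numbers below are hypothetical, chosen only to show how a modest request rate from each zombie adds up; they do not describe any real botnet or service.

```python
# All figures are hypothetical, chosen only to show the arithmetic.
servers = 20
requests_per_server_per_sec = 5_000   # assumed capacity of one server
capacity = servers * requests_per_server_per_sec

zombies = 200_000                     # assumed botnet size
requests_per_zombie_per_sec = 10      # modest load from each infected machine
attack_rate = zombies * requests_per_zombie_per_sec

overload_factor = attack_rate / capacity
print(f"capacity:    {capacity:,} requests/s")
print(f"attack rate: {attack_rate:,} requests/s")
print(f"overload:    {overload_factor:.0f}x capacity")
```

Under these assumptions, each zombie sends only ten requests per second, yet the combined traffic is twenty times what the servers can handle, so nearly all legitimate requests would be dropped or delayed.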

Automation enables attacks on a large scale. It is easy to cast a wide net via scripting. You can try thousands or millions of potential targets and see if any of them have security weaknesses that you can exploit. Attacks that have even minuscule rates of return or small chances of success are now profitable. This includes email scams, transferring fractional cents from bank accounts, and attempts to exploit known weaknesses.
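A quick expected-value calculation shows why tiny success rates can still pay off once the cost per attempt approaches zero. The `campaign_profit` function and all of the inputs below are hypothetical, used only to illustrate the economics.

```python
def campaign_profit(messages: int, cost_per_message: float,
                    success_rate: float, gain_per_success: float) -> float:
    """Expected profit of an automated campaign under the given assumptions."""
    expected_successes = messages * success_rate
    return expected_successes * gain_per_success - messages * cost_per_message


# Hypothetical inputs: ten million scam emails, one success per 100,000 sent.
profit = campaign_profit(
    messages=10_000_000,
    cost_per_message=0.0001,  # assumed cost in dollars to send one message
    success_rate=0.00001,     # assumed fraction of recipients who fall for it
    gain_per_success=500.0,   # assumed dollars gained per victim
)
print(f"expected profit: ${profit:,.0f}")
```

With these assumed figures, about 100 victims out of ten million recipients bring in roughly $50,000 against about $1,000 in sending costs. The same calculation done for a physical crime, where each attempt costs time and carries real risk, looks very different.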

Anonymity. Actors can act anonymously and tracking them down can be extremely difficult, if not impossible. Even today, nobody knows who launched Conficker or many other large-scale botnets. One can attack with impunity. Trust also becomes a challenge. Do you know if you are really communicating with your bank or with an imposter?

No borders. Many countries do not control the data flow into and out of their countries. While humans have to go through border security checks, companies like Google, Facebook, and Amazon have fiber optic cables connecting their data centers throughout the world. So do Internet Service Providers (ISPs). Most countries do not force data traffic to be routed through government-controlled gateways. Notable exceptions are China, North Korea, Russia, Ethiopia, Sudan, Cuba, Bahrain, Iran, Oman, Qatar, Saudi Arabia, Syria, Turkmenistan, United Arab Emirates, Uzbekistan, Vietnam, and Belarus.

Indistinctive data. It is difficult to distinguish valid data from malicious attacks. A malicious user logging in may look the same as a legitimate user. Code is a bunch of bits and it is not always possible to tell whether it is harmful until it is executed. The network will just route packets to their desired destination.

Areas of Attack

We have looked at the consequences of threats. Let us consider some of the motivations of attackers. These mirror real-world threats.

Criminal attacks

- Fraud

- Attacks that involve deception or a breach of confidence, usually involving money but may also be used to discredit an opponent.

- Scams

- A scam is a type of fraud that usually involves money and a business transaction. The victim often pays for something and gets little or nothing in return. Pyramid schemes and fake auctions are examples of scams.

- Destruction

- Destruction is often instigated as revenge by [ex-] employees, disgruntled customers, or political adversaries. It can include disruption rather than destruction, such as Distributed Denial of Service (DDoS) attacks to make services unreachable or outright destruction of files, computers, or websites.

- Intellectual property theft

- This includes theft of an owner’s intellectual property, which can include software, product designs, unlicensed use of patents, marketing plans, business plans, etc. This includes not just theft through hacking but also the distribution of copies and unauthorized use of software, music, movies, photos, and books. A goal in security is to keep private data private. However, we sometimes want to make data publicly accessible while keeping control of how it is redistributed.

- Identity theft

- If someone can impersonate you, they can access whatever you are allowed to access: withdraw money, sell your car, log into your work account, etc.

- Brand theft

- Companies spend a lot of money building up their brand and reputation. Someone can steal the brand outright (e.g., sell an Apple phone that’s not made by Apple, or a Rolex watch) or make minor modifications to product design, packaging, or presentation 13. The goal may be to confuse customers (e.g., the fake Apple store in Kunming) or to boost the perception of the adversary’s reputation. For example, a website can advertise that it is “Norton secured” when it has not obtained a Symantec SSL Certificate or advertise itself as a Microsoft Partner (or even a Gold Certified Partner) when it is not.

Privacy Violations

Some attacks result in privacy violations. They may not be destructive or even detected by the entity that is attacked but can provide valuable data for future scams or deceptions.

- Surveillance

- Surveillance attacks include various forms of snooping on users. They can include the use of directional microphones, lasers to detect glass vibration, hidden cameras and microphones, keyloggers, GPS trackers, wide-area video surveillance, and toll collection systems.

- Databases

- Mining data is easy if it is computer-accessible. The mined data can be useful for social engineering attacks and determining where specific users are present. Free and paid databases include credit databases, health databases, political donor lists, real estate transfer, death, birth, and marriage records. Additional corporate databases contain Amazon shopping history, Netflix movies, and Facebook posts. The company Cambridge Analytica built a vast database on the psychological profiles of 230 million adult Americans via Facebook quizzes.

- Traffic analysis

- You may not see the message or be able to decrypt its contents but you can see communication patterns: who is talking to whom. This allows you to identify social circles. Governments used this to identify groups of subversives. If you were tagged as subversive, the people with whom you communicate would, at the least, be suspicious. In Secrets and Lies14, Bruce Schneier points out that “in the hours preceding the U.S. bombing of Iraq in 1991, pizza deliveries to the Pentagon increased 100-fold.”

- Large-scale surveillance

- ECHELON is a massive surveillance program led by the NSA and operated by intelligence agencies in the U.S., UK, Canada, Australia, and New Zealand. It intercepts phone calls, email, Internet downloads, and satellite transmissions. The wide scope of communication mechanisms it monitors gives it an incredible ability to identify social networks. However, the challenge with massive data gathering operations is information overload. How do you identify critical events from noise? For instance, how do you distinguish a real threat to kill the president or detonate a bomb from someone’s wishful thinking?

- Publicity attacks

- Publicity attacks were popular in the early days of the web. Hackers took pride in showing off their exploits: “Look, I ‘hacked’ into the CIA15!” These attacks still exist but have lost much of their luster.

- Service attacks

- Denial of Service (DoS) attacks take systems out of service, often by saturating them with requests to render them effectively inoperative. Since it can be difficult for one machine on one network to do this, this is often done on a large scale by many widely distributed computers, leading to something known as a Distributed Denial of Service (DDoS) attack. Denial of service can also be the process of getting the target to disable the defenses they have set up. For example, if your car alarm goes off every night for apparently no good reason, you are likely to disable it, giving the attacker unfettered access.
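The traffic analysis item above notes that communication patterns alone can reveal social circles. A minimal sketch of that idea follows: given only who contacted whom, it groups parties into connected clusters. The records and the `social_circles` function are hypothetical, and grouping by connected components is just one simple way to approximate a "circle".

```python
from collections import defaultdict

# Hypothetical metadata: (sender, recipient) pairs with no message content.
records = [
    ("ann", "bob"), ("bob", "cat"), ("cat", "ann"),
    ("dan", "eve"), ("eve", "dan"),
]


def social_circles(pairs):
    """Group parties into connected components of the contact graph."""
    graph = defaultdict(set)
    for a, b in pairs:
        graph[a].add(b)
        graph[b].add(a)

    seen, circles = set(), []
    for start in sorted(graph):
        if start in seen:
            continue
        # Depth-first walk collects everyone reachable from `start`.
        stack, circle = [start], set()
        while stack:
            person = stack.pop()
            if person in circle:
                continue
            circle.add(person)
            stack.extend(graph[person] - circle)
        seen |= circle
        circles.append(sorted(circle))
    return circles


print(social_circles(records))  # [['ann', 'bob', 'cat'], ['dan', 'eve']]
```

Nothing here required reading a single message, which is why encrypting content alone does not defeat traffic analysis.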

Attack techniques

Attackers use a variety of techniques for getting their software onto target systems or for logging into those systems. Malware is an umbrella term for any form of malicious software. It includes keystroke logging, camera monitoring, content upload, and ransomware. A few of these techniques are listed below:

- Social engineering

- Social engineering is as useful in the cyber world as it is in the real world. It covers techniques of manipulating, influencing, or deceiving targets to get them to take some type of action that is not in their best interest. For example, they may inadvertently download software, plug in an infected USB device that they found on the street, or open a malicious attachment in a mail message from a “friend”. Phishing and spear phishing, described below, are two forms of social engineering that use email as a distribution medium.

- Phishing

- With phishing, an attacker sends an email that looks like it comes from a reputable organization to a broad group of people. The sender often appears to be a bank or shipping company asking you to click on a link and fill out a web form, or has a malicious attachment (e.g., one masquerading as an invoice).

- Spear Phishing

- Unlike phishing, spear phishing is a focused attack via email on a particular person or organization. It often contains some highly specific information known to the target, such as an account number or the name of a friend or colleague. It might appear to be a request from a supervisor, for example.

- File types

- Certain file types (e.g., a Microsoft Word document) cause an application to open and read the file. Malicious files can open an app and exploit vulnerabilities in the application. For example, document files or spreadsheets may execute Visual Basic programs from Microsoft Office programs.

- Web sites

- This is often a form of social engineering where a site may offer free downloads of software, books, or movies, but the downloaded content may be malicious software, or the site may exploit vulnerabilities in the web browser to either extract information from the client or credentials from the user. Additionally, reputable websites can get infected.

- Code/command injection

- Websites and other services may have coding errors that enable an attacker to provide input that will either yield more information than the program should provide or execute arbitrary commands on the server.

- Social Media

- The use of social media is not an attack but can serve as a great source of information for hackers. For example, if you post when you’re going on vacation or going to a conference, an adversary can use this data for impersonation or spear phishing attacks.
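For the code/command injection item in the list above, one common defense is to validate input against a strict allow-list and to avoid handing user input to a command shell at all. The sketch below assumes a hypothetical service that pings a host supplied by the user; `build_ping_command` and the hostname pattern are illustrative choices, not a complete validator.

```python
import re
from typing import List

# Conservative allow-list: letters, digits, dots, and hyphens only,
# starting and ending with a letter or digit.
HOSTNAME_RE = re.compile(r"[A-Za-z0-9]([A-Za-z0-9.-]{0,251}[A-Za-z0-9])?")


def build_ping_command(host: str) -> List[str]:
    """Return an argument list for ping, or raise ValueError on suspicious input."""
    if not HOSTNAME_RE.fullmatch(host):
        raise ValueError(f"rejected host: {host!r}")
    # An argument list (rather than one shell string) means that no
    # shell ever gets a chance to interpret characters in `host`.
    return ["ping", "-c", "1", host]


print(build_ping_command("example.com"))
try:
    build_ping_command("example.com; cat /etc/passwd")
except ValueError as err:
    print(err)
```

Had the service instead built a single string such as `"ping -c 1 " + host` and passed it to a shell, the second input would have run an extra command chosen by the attacker.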

Adversaries: Know Thine Enemy

“If you know the enemy and know yourself, you need not fear the result of a hundred battles. If you know yourself but not the enemy, for every victory gained you will also suffer a defeat. If you know neither the enemy nor yourself, you will succumb in every battle.”

— Sun Tzu, The Art of War

Characteristics

We have seen that motives in computer attacks are generally similar to those in the physical world. Adversaries in the digital world also mimic those of the physical world. People are people, after all. Various factors determine what kind of person may attack a specific system. We need to know our attackers, their motivation, and their skill level to determine what kind of defense we need to mount.

Goals

There can be a wide variety of goals that cause an attacker to target a system. These mirror the attack types we just examined. The goal of an attack may be to inflict damage, to gain financially, or simply to get information on someone for future attacks. It is important to understand goals to know what countermeasures will be effective. Inflicting damage on a system (e.g., throwing a grenade) is a totally different goal than trying to steal someone’s credit card number.

Levels of access

Insiders have access and tend to be trusted. They also have an intimate knowledge of the systems and software and tend to have a good level of expertise in navigating the systems, although generally not in attacking those systems.

Risk tolerance

Terrorists are willing to die for their cause. Career criminals will risk jail time. Publicity seekers, on the other hand, do not want to risk getting jailed.

Resources

Some adversaries operate on a tiny budget while others are extremely well-funded. With funding, one can buy computing resources, social resources (e.g., bribe someone), and expertise. Time is also a resource and some adversaries might have all the time in the world to attack a system while others either have a deadline or a time limit where it no longer makes economic sense to attack.

Expertise

Some adversaries are highly skilled while others are just poking around or using somebody else’s software to see if they can attack a system. The best adversaries are well-funded, highly-focused teams of experts in cybersecurity and penetration techniques.

Economics

All these goals distill into economics (engineering trade-offs), where a rational adversary will balance time, money, skills, risk, and likelihood of success to make a decision of whether it is worthwhile to attack a target.

Who are the adversaries?

Hackers

Hackers experiment with limitations of systems and may be good or evil. Their ultimate goal is to get to know a system better than the designers. Classic examples include phone hackers whose goal was to get free phone calls16 and lock pickers17, who work on developing skills in picking locks even if they never plan to steal anything locked by one of those locks. They hack for the thrill of breaking into systems or discovering weaknesses in protections.

Only a small percentage of hackers are truly smart and innovative. Most just follow instructions or use programs created by those smart hackers. This broader set of hackers is often referred to as “script kiddies”. The trading of hacking tools and techniques enables a large community to get a powerful arsenal for hacking. Hackers often organize into groups with distinct cultures among the various groups. Typical hackers have a lot of time but not much money. Personalities vary, of course. Some hackers are extremely risk-averse, while others are not. Regardless of intent, the activity is generally criminal. Hackers, through their discovery and sharing of techniques, often enable other adversaries to get their job done.

At the other end of the skill spectrum, hackers can also include security researchers and dedicated bug hunters. Bug hunters find bugs in systems. Exploit coders look at these bugs and try to find ways to exploit them to compromise security. The goals can be academic or malicious.

White hat hackers do not intend to cause damage and instead focus on finding exploits and notifying the authors so they can make their systems more secure. This might be done for profit (e.g., via bug bounty programs offered by Google, Apple, Tesla, and others), for recognition, or simply with the goal of trying to create a more secure world.

Black hat hackers do exactly the same work as white hat hackers: search for bugs and find ways of exploiting them. However, their goal is to profit from these exploits either directly, by hacking into the systems, or indirectly, by selling their services. Whether the end purpose is good or evil is largely immaterial. The highest bidder might be a national intelligence agency or a criminal organization.

To defend from hackers, one must look at the system from the outside as an attacker, not from the inside as a designer.

Criminals

Individuals or small groups with criminal intent form the largest group of adversaries. They are the ones who set up ATM skimmers and cameras to replicate ATM cards … or any number of other schemes. They often do not reap huge money but can be creative. The skills of this group can vary greatly, from people who send emails with malicious links or deceptive information (e.g., spam) to those with highly-targeted goals.

Malicious insiders

Malicious insiders are among the most insidious of adversaries because they are indistinguishable from legitimate, trusted ones. Perimeter defenses don’t work on them: they have clearance to get into the physical premises and can authenticate themselves to servers. They often have a high level of access and are trusted by the very systems that they are attacking. For example, an insider may program a payroll system to give herself a raise, turn off an alarm at a specific time, create back-door access to bypass authentication, implement a key generator for software installations, or delete log files.

Insiders are difficult to identify and stop since they have been cleared for access and, effectively, belong there. Most security defenses are designed to deal with external attackers, not internal ones. Insiders’ goals vary. They might be seeking revenge, exposing corruption, or trying to get money or services.

Consider the popular open-source libraries colors and faker. The colors library is downloaded over 20 million times a week on average and is used in almost 19,000 projects. Faker is downloaded over 2.8 million times per week. In January 2022, the developer behind these libraries intentionally committed code to sabotage their function as an act of retaliation against corporations and commercial customers who use community software but do not give back to the community.

Industrial spies

Industrial espionage involves getting confidential information about a company. This can include finding out about new product designs, trade secrets, bids made on a project, or corporate finances. Industrial espionage may include hiring and bribing employees to reveal trade secrets, eavesdropping, or dumpster diving.

If the adversary is a competing company or a country, it may be extremely well-funded. For example, a country may want to ensure that its aerospace company wins a bid on a large aircraft contract and will try to find information about the competition. While well-funded, industrial spies are often risk-averse since the attacker’s company’s (or country’s) reputation can be damaged if it is caught spying.

Press

Reporters have a lot of incentive to be the first to get the scoop on a news item: it can drive up circulation, boost their credibility, and boost their salary. Getting the scoop may involve identifying several targets (government agencies, celebrities, companies) and spying on them in a variety of ways: social engineering, bribing, dumpster diving, tracking movements, eavesdropping, or breaking in. Like industrial spies, the press is generally risk-averse for fear of damaging its reputation.

Organized crime

The digital landscape offers organized crime more opportunities to make money. One can steal cell phone IDs, credit card numbers, ATM information, or bank accounts to get cash. Money laundering is a lot easier with electronic funds transfers (EFTs) and anonymous currency systems such as Bitcoin.

Organized crime can spend good money — if the rewards are worth it — and can purchase expertise and access. They also have higher risk tolerance than most individuals.

Police

Police are risk averse but have the law on their side. For example, they can get search warrants and take evidence. They also are not above breaking the law via illegal wiretaps, destruction of evidence, disabling body cameras, or illegal search and seizure.

Terrorists (freedom fighters) [18]

Terrorists, and terrorist-like organizations, are motivated by geopolitics, religion, or their set of ethics. Example organizations include Earth First, Hezbollah, ISIS, Aryan Nations, Greenpeace, and PETA. Terrorists are usually more concerned with causing harm than with getting specific information. Although highly publicized, there are very few terrorists in the world, and, statistically, terrorist activity is scant. Terrorists usually (not always) have relatively low budgets and low skill levels.

National intelligence organizations

This includes groups such as North Korea’s General Bureau of Reconnaissance, Iran’s Cyber Defense Command, the Canadian Security Intelligence Service, the Federal Security Service of the Russian Federation (FSB), the UK Security Service (MI5), and Mossad. Within the U.S., there are 17 intelligence agencies, including the Central Intelligence Agency (CIA), National Security Agency (NSA), National Reconnaissance Office (NRO), Defense Intelligence Agency (DIA), and Federal Bureau of Investigation (FBI).

These groups have large (almost unlimited, in some cases) amounts of money, large staffs, and long-term goals: maintaining a watch on activities essentially forever. They are somewhat risk-averse for fear of bad public relations. More importantly, they do not want any leaks or government actions to reveal their attack techniques. For example, as soon as the Soviet Union deduced that the U.S. press was reporting information that could have only been obtained from eavesdropping on cell phone calls, all government cell phone communication became encrypted. Similarly, even though the Allies were able to break German cryptography during World War II, acting on too much of the information from decrypted communications would have told the Germans that their messages were no longer secret. Intelligence agencies may also work with companies to ensure that the nation’s companies can underbid competitors or have every competitive technology advantage.

Due to their wealth and influence, they can often shape standards. In the U.S., for example, the NSA was instrumental in the adoption of 56-bit keys for DES (instead of a longer and thus stronger key) and of the purposefully flawed Dual_EC_DRBG (Dual Elliptic Curve Deterministic Random Bit Generator) that was used to generate “random” keys for elliptic curve cryptography. Computers from Lenovo, a company owned partially by the Chinese government’s Academy of Sciences, have been banned by U.S., British, Canadian, Australian, and New Zealand intelligence agencies because of “malicious circuits” built into the computers [19]. Edward Snowden revealed that the NSA planted backdoors into Cisco routers built for export that allow the NSA to intercept any communications through those routers. In 2019, Huawei was accused of having backdoors in its telecom equipment and routers.

Infowarriors - cyber-warfare

In times of war, nothing is safe, and it has long been speculated that warring nations will launch electronic attacks together with conventional military ones. These attacks can aim to disrupt a nation’s power grids and wreak havoc on transportation systems. They can also aim to do large-scale damage to electronic equipment in general with EMP (electromagnetic pulse) weapons. Like national intelligence organizations, attackers will have access to vast amounts of money and resources. Unlike intelligence agencies, the goals are short-term ones. There is no risk of public relations embarrassment and no need for continued surveillance.

The 2016 and 2020 U.S. presidential elections added a new twist to this arena. Disruptions need not be strikes that wipe out targets such as power grids but can instead be a steady stream of misinformation, amplification of information, and possibly blackmail that can steer public opinion. Tampering with electronic voting machines can further manipulate the political process.

In the past, there was a distinction between military and civilian systems: military systems used custom hardware, operating systems, application software, and even custom networks and protocols. That distinction has largely evaporated. Commercial technology advances too quickly and an increasing number of military and government systems use essentially the same hardware and software as civilian companies. In 2012, for example, U.S. soldiers received Android tablets and phones, running a modified version of Android. In 2016, U.S. Army Special Operations Command reported that they were replacing their Android tactical smartphone with an iPhone 6s. The report stated that the iPhone is “faster; smoother; Android freezes up and has to be restarted too often”. Bugs, with security ramifications, have become de rigueur for the military. Vulnerabilities present in commercial systems now apply to military and government systems. Moreover, governments tend to have slower refresh cycles, so there’s a higher likelihood of older systems with more documented vulnerabilities being present. These systems include routers, Wi-Fi access points, and embedded systems, all of which are likely to not be treated as “computers” and patched or upgraded on a regular basis.

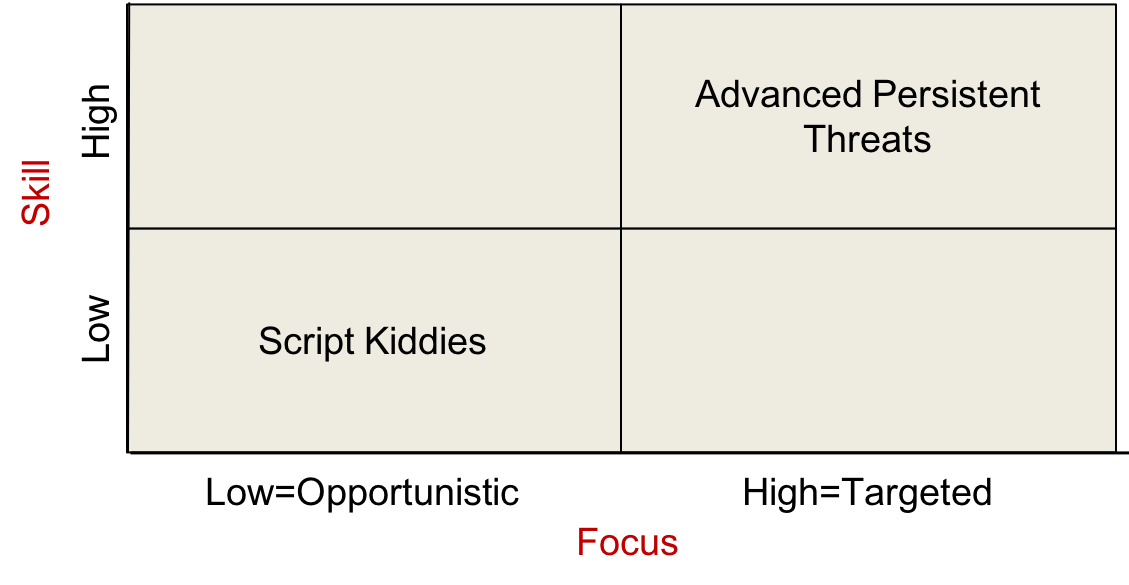

Threat matrix

We can simplify this list of adversaries into a four-quadrant threat matrix, with focus on the x-axis and skill on the y-axis.

Focus

Threats with a low focus have a broad scope. They are opportunistic. The attacker does not have any specific target in mind and hopes to cast a wide net to see what people or systems can be attacked. For instance, an attacker may scour the Internet searching for network cameras or routers with default passwords or send out an email message to a broad audience, hoping that someone will be careless enough to click on a link or take action. Opportunistic attacks are usually automated via worms and viruses. They look for – and exploit – poorly-protected services, bugs, or known login credentials. By hitting a large set of systems, the attacker hopes that even if a tiny percentage is vulnerable, there will be some wins.
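Because opportunistic attackers look for known login credentials, a defender can run the same check first. The following is a minimal sketch of such an audit, not a real tool: the `Device` record, the `KNOWN_DEFAULTS` list, and the sample inventory are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass

# Hypothetical list of factory-default credentials. A real audit would use a
# maintained list for the specific device models in the inventory.
KNOWN_DEFAULTS = {("admin", "admin"), ("admin", "password"), ("root", "root")}


@dataclass
class Device:
    name: str
    username: str
    password: str


def find_default_credentials(devices):
    """Return the names of devices whose login matches a known default."""
    return [d.name for d in devices if (d.username, d.password) in KNOWN_DEFAULTS]


# Illustrative inventory; the names and credentials are made up.
inventory = [
    Device("lobby-camera", "admin", "admin"),
    Device("core-router", "netops", "x9!Lq#72vT"),
    Device("printer-3", "root", "root"),
]

flagged = find_default_credentials(inventory)
print(flagged)
```

Any device this flags is exactly the kind of target an automated scan would find, so it is a reasonable place to start hardening.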

Threats with a high focus are targeted. The attacker has a specific target in mind with a specific goal (extract a document, cause damage, steal money) and will customize the attack to get to that target. Spear phishing, for example, is sending an email with a malicious link but with information that requires some specific knowledge of the person being targeted. It may appear to come from a friend or co-worker or may reference known details of a bank account.

Targeted attacks require planning. This often entails gathering some intelligence about the target. For example, social engineering might be used to learn about the user or to gain an understanding of the infrastructure (system, software, and networks) that is being attacked to know where the desired assets are located and what steps one needs to take to retrieve them.

Some attacks may fall in between – not targeting arbitrary people but trying to reach specific groups. For instance, it is believed that a set of malicious websites that hacked into iPhones over a two-year period were put in place by the Chinese government to target Uyghur Muslims [20].

Skill

The vast majority of attacks are performed by low-skilled players. The term script kiddie refers to someone who does not have the skills to find or write their own exploits but uses programs (scripts) created by others to attack systems. More often than not, these are not targeted attacks but rather attempts to find systems that are capable of being exploited. An example is someone downloading a malware toolkit and trying a large set of IP addresses to see if they can find any vulnerable systems.

Poorly-protected systems may still be vulnerable to attacks from script kiddies and even these attackers are thus capable of causing real damage. For example, the first successful documented cyberattack on the U.S. power grid in March of 2019 was attributed to “an automated bot that was scanning the internet for vulnerable devices, or some script kiddie.”

With increasing skill, a hacker may find their own exploits or write their own tools to implement recently found exploits. With opportunistic attacks, the goal is still to find a target – any target – that is vulnerable to the exploit.

Targeted attacks can also be performed with a low skill level, although they generally require a bit of work to identify the target and know how to achieve one’s goals. A simple low-skill targeted threat is the aforementioned spear phishing attack.

The most dangerous adversaries go by the name of advanced persistent threats (APTs). This is a military term that identifies attacks that are sustained until their goal has been achieved. This term has been adopted by the computer security community to refer to organizations that perform highly-complex attacks. These groups are often intelligence agencies of nation-states or groups that are funded by the state. The attacks, called advanced targeted attacks, require knowledge of a system’s infrastructure and may require considerable time and effort to execute. The attack may require multiple steps using varying exploits to reach the goal as one may need to hop from one system to another. This is referred to as lateral movement. An APT group may be out to steal data, sabotage a utility, destroy critical infrastructure, or siphon money from a banking network. These attackers are sophisticated, goal-oriented, and well-funded.
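The four quadrants of the threat matrix can be expressed as a small classification function. This is only an illustrative sketch: the 0-to-1 scales, the 0.5 threshold, and the scores assigned to each example adversary are assumptions made for the example, not measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Adversary:
    name: str
    focus: float  # assumed scale: 0.0 = opportunistic, 1.0 = highly targeted
    skill: float  # assumed scale: 0.0 = script kiddie, 1.0 = highly skilled


def quadrant(adv, threshold=0.5):
    """Place an adversary in one of the four threat-matrix quadrants."""
    focus = "targeted" if adv.focus >= threshold else "opportunistic"
    skill = "high-skill" if adv.skill >= threshold else "low-skill"
    return f"{skill} {focus}"


# Illustrative scores only.
examples = [
    Adversary("script kiddie with a toolkit", focus=0.1, skill=0.1),
    Adversary("spear phisher", focus=0.9, skill=0.2),
    Adversary("author of a new worm", focus=0.1, skill=0.8),
    Adversary("advanced persistent threat", focus=0.9, skill=0.9),
]

placements = {adv.name: quadrant(adv) for adv in examples}
for name, quad in placements.items():
    print(f"{name}: {quad}")
```

In practice focus and skill are continuous, as the in-between cases described above suggest, so the hard threshold is a simplification.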

Threat models

A threat model is the set of assumptions that we construct about the abilities and motivations of an adversary. It gives us a way to identify and prioritize potential threats while thinking from the attacker’s point of view. Attackers are not going to follow your rules. They don’t care about the policies you put in place and only need to circumvent any mechanisms that enforce those policies. Protection requires thinking about the types of attacks that could be launched against your systems and what things might possibly go wrong.

We need to assess what is valuable to us (what is worth our time and money to defend), what is valuable to the attacker (what they are likely to go after), the likelihood of an attack, what type of attackers might go after our systems (joy hackers or the NSA?), and how we can recover after an attack. For example, if ransomware cripples your organization, do you have to pay and hope that your data will be restored or can you simply reformat and reinstall your operating system and software and recover data from recent backups?
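One common way to turn such an assessment into priorities is to score each threat as likelihood times impact and rank by the result. The sketch below assumes that scoring rule, which is a widely used heuristic rather than something prescribed here. The threat names and numbers are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Threat:
    name: str
    likelihood: float  # assumed: estimated chance of the attack in a year (0 to 1)
    impact: float      # assumed: estimated cost if the attack succeeds


def risk(threat):
    """Expected yearly loss under the likelihood-times-impact assumption."""
    return threat.likelihood * threat.impact


def prioritize(threats):
    """Order threats from highest to lowest estimated risk."""
    return sorted(threats, key=risk, reverse=True)


# Made-up estimates for a hypothetical organization.
threats = [
    Threat("stolen laptop", likelihood=0.5, impact=5_000),
    Threat("ransomware without usable backups", likelihood=0.3, impact=200_000),
    Threat("nation-state intrusion", likelihood=0.01, impact=1_000_000),
]

ranked = [t.name for t in prioritize(threats)]
print(ranked)
```

Recent, tested backups would lower the impact estimate for ransomware, which is how the recovery question above feeds back into the ranking.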

Thinking about security